The need to maintain good-quality operational data is often well understood by organisations. The guiding principles that govern “good” data practices have been around for decades, and the technology to support them has progressed by leaps and bounds in recent years.

So, if we have the information and tools to succeed, why do so many organisations still fall short?

Historically, data quality programs could be prohibitively expensive as organisations needed to align internal systems, processes, roles, and responsibilities to build a holistic data quality strategy that garners results. The work itself could be time-consuming and manual in nature, meaning data quality was primarily the focus of enterprise-grade businesses.

Today, through the power of cloud-hosted technology, this is changing. Data quality teams can self-serve and become less reliant on IT, automating repetitive tasks and building data quality rules themselves. In addition, flexible hosting options in the cloud lower the total cost of ownership, improving accessibility for a wider range of organisations.

In this article, we’ll explore the causes of poor-quality data, its impact if ignored, why it’s important to tackle it head-on, and how technology is changing the landscape to make data quality more accessible, impactful and affordable.

What is operational data quality?

Operational data is any piece of data in your organisation that is used to perform an operational task. Operational data quality refers to the business practices, software tools and techniques for maintaining the quality of operational data.

Examples include the work the Marketing team does to “clean” an email list before a marketing campaign, or the work business analysts do to analyse data errors and map journeys in a CRM before a system migration.
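To make the email list example concrete, here is a minimal Python sketch of what “cleaning” might involve: trimming and lower-casing each address, rejecting entries that fail a basic syntax check, and dropping duplicates. The function name `clean_email_list` and the pattern are illustrative assumptions, not part of any Experian product, and the check is intentionally simple rather than a full email validator.

```python
import re

# Deliberately simple pattern (an assumption): "something@something.tld".
# It is not a full RFC 5322 validator and does not confirm the mailbox exists.
EMAIL_PATTERN = re.compile(r"^[^@\s]+@[^@\s]+\.[^@\s]+$")


def clean_email_list(raw_emails):
    """Return (valid, rejected).

    valid    - normalised, de-duplicated addresses in first-seen order
    rejected - original entries that failed the basic syntax check
    """
    valid, rejected, seen = [], [], set()
    for raw in raw_emails:
        # Normalise: trim whitespace and lower-case (assumes case-insensitive matching is acceptable).
        email = raw.strip().lower()
        if not EMAIL_PATTERN.match(email):
            rejected.append(raw)
            continue
        if email not in seen:
            seen.add(email)
            valid.append(email)
    return valid, rejected


valid, rejected = clean_email_list([
    "Ana@Example.com ",
    "ana@example.com",
    "bob@example",
    "carol@example.org",
])
print(valid)
print(rejected)
```

In practice a syntax check like this only catches obvious errors; confirming that an address is deliverable needs a dedicated validation service.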

How does operational data go bad?

Operational data in an organisation can deteriorate or “go bad” for various reasons, leading to broader downstream issues. Understanding these reasons is crucial for effectively managing and maintaining data quality. Here are some common ways operational data can degrade:

- Human data entry errors

- Outdated information that lies undiscovered and uncorrected

- Incomplete or missing system data

- Duplicate data (very common!)

- Data integration issues when merging multiple systems

- Lack of data governance practices

- Data security issues or inadequate access controls

- General lack of focus on data quality processes

- Poor matching routines when ‘new’ data is loaded

To address these challenges and prevent operational data from going bad, organisations should implement data quality management practices. It’s essential to recognise that data quality is not a one-time task but an ongoing process that requires attention and resources to maintain over time.

Making operational data quality as accessible and easy to deliver as possible is therefore critical.
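As a rough illustration of what an ongoing check could look like, the Python sketch below profiles a small set of records for three of the issues listed above: incomplete data, outdated information, and likely duplicates. The field names, the one-year staleness threshold, and the name-plus-postcode match key are all assumptions made for this example; real matching routines are considerably more sophisticated.

```python
from collections import defaultdict
from datetime import date

REQUIRED_FIELDS = ("name", "postcode", "email")  # hypothetical required fields
STALE_AFTER_DAYS = 365  # assumption: records untouched for a year need review


def match_key(record):
    """Crude match key: lower-cased name and postcode with all whitespace removed."""
    name = "".join((record.get("name") or "").lower().split())
    postcode = "".join((record.get("postcode") or "").lower().split())
    return (name, postcode)


def profile(records, today):
    """Return ids of incomplete records, stale records, and groups of likely duplicates."""
    incomplete, stale = [], []
    groups = defaultdict(list)
    for rec in records:
        # Incomplete: any required field is missing or empty.
        if any(not rec.get(field) for field in REQUIRED_FIELDS):
            incomplete.append(rec["id"])
        # Outdated: last update is older than the threshold.
        if (today - rec["last_updated"]).days > STALE_AFTER_DAYS:
            stale.append(rec["id"])
        # Duplicates: group by match key, skipping records with no usable key.
        key = match_key(rec)
        if all(key):
            groups[key].append(rec["id"])
    duplicates = [ids for ids in groups.values() if len(ids) > 1]
    return {"incomplete": incomplete, "stale": stale, "duplicates": duplicates}


records = [
    {"id": 1, "name": "Jo Smith", "postcode": "AB1 2CD",
     "email": "jo@example.com", "last_updated": date(2024, 5, 1)},
    {"id": 2, "name": "jo smith ", "postcode": "ab12cd",
     "email": "", "last_updated": date(2021, 1, 15)},
    {"id": 3, "name": "Sam Lee", "postcode": "EF3 4GH",
     "email": "sam@example.com", "last_updated": date(2024, 3, 10)},
]
report = profile(records, today=date(2024, 6, 1))
print(report)
```

Running a check like this on a schedule, rather than once before a campaign or migration, is one way to treat data quality as a continuous process.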

Why is operational data quality so important?

Ensuring high data quality is essential for organisations because it offers numerous benefits. Organisations can improve decision making with accurate data. They can boost productivity and improve their customer relationships. They can also lower operational costs and forecast more accurately.

Many of Experian’s customers are businesses in regulated industries. Sound data quality practices are also crucial as part of a robust and structured strategy to maintain regulatory compliance.

And yet, historically, organisations have struggled to get to grips with data quality. Common challenges include:

- Budget – is there sufficient cash available to fund activities?

- Backing – is operational data quality a Board-level priority?

- Talent – does the organisation have the requisite skills to succeed?

- Infrastructure – is the organisation set up to succeed?

But what if there were a way to automate your data quality activities, remove reliance on IT and lower the cost of operations?

How Experian makes operational data quality more accessible, impactful, and affordable

Aperture Data Studio from Experian is a data quality platform designed to help you tackle your most pressing data quality challenges, from gaining a single customer view to assisting with data migrations and enhancing accuracy in compliance reporting.

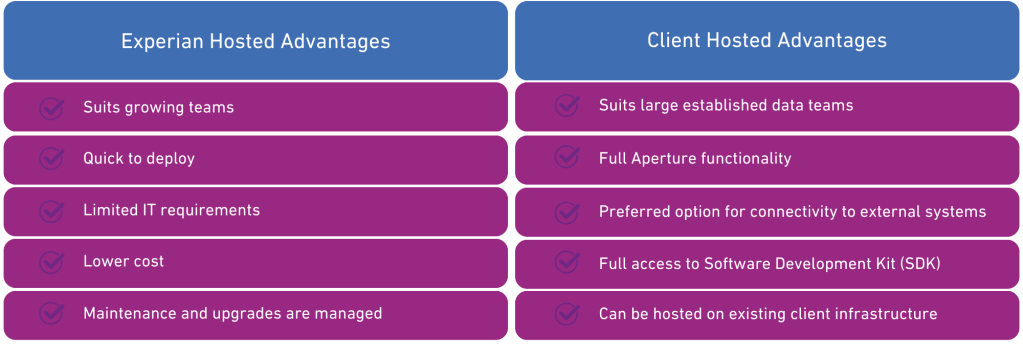

Aperture Data Studio is available in two deployment options: Experian-hosted and client-hosted. It is designed specifically to empower data quality teams and remove over-reliance on IT, saving time and money.

You no longer need to worry about infrastructure, servers, or your budget, as all this is solved within Experian’s hosted Aperture Data Studio solution.

With Experian-hosted Aperture Data Studio you can:

- Perform interactive data profiling analysis – Profile full volumes of data, at real-time speed, in a business-friendly interface

- Build your own (or use our) Data Quality rules – Create, refine and deploy your business rules with a click of a button

- Cleanse your contact data – Ensure address, email and phone data is accurate, complete, and valid for use in sales, marketing and operations

- Transform & standardise your operational data – Keep your data assets consistent and up-to-date

- Build your own workflows – Drag and drop functionality unlocks data quality automation

If you are struggling with the accuracy of your operational data, and budget, resources, or Board backing are an issue, then talk to the Experian team today about Experian-hosted Aperture Data Studio.