Robust data governance is a strategic necessity for organisations that rely on data. However, many organisations struggle to know where and how to start.

Successful data governance depends on data quality management, which ensures that data is accurate, reliable and fit for purpose.

The starting point: Assessing your data governance maturity

Experian has created a short survey to assess your organisation’s internal data governance capabilities against industry best practices. The survey results will help identify your organisation’s current stage within a structured data governance maturity model.

This model outlines specific criteria across key areas such as data quality, stewardship, and governance processes. Based on your results, Experian can provide tailored recommendations to help you progress from foundational improvements to strategic initiatives that deliver measurable outcomes.

The cost of poor data quality

Many business users are dissatisfied with the quality of the data in their core information systems, such as CRMs, BI (business intelligence) reports and data lakehouses, and cannot trust that data when making decisions. Poor, unmanaged data quality not only harms revenue-generating opportunities, customer satisfaction and service quality, but also heightens data risk within your organisation.

With the advent of AI, data governance is moving higher up organisations’ list of priorities. Forty-six percent of organisations are not confident in their ability to manage data risk.[1] This goes some way towards explaining why so many AI projects fail: without the right level of data governance in place to productionise AI initiatives effectively, they are destined for the proof-of-concept (POC) graveyard. Fifty-six percent of organisations also describe data reliability as a barrier to advancing GenAI pilots.[2]

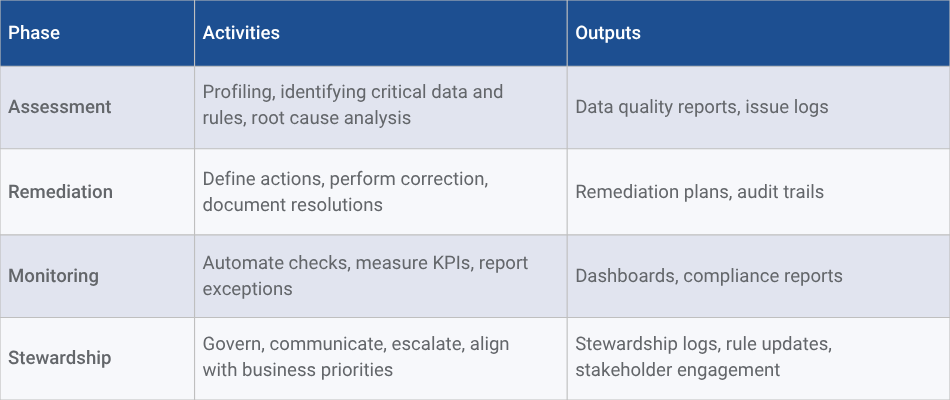

Best practices for continuous data quality management

Data quality is a core component of data governance; in fact, the two go hand in hand. Here are some tips to help you put the right processes in place for data profiling, monitoring, remediation and stewardship, so that your data quality is managed continuously.

Data Profiling

Purpose: Understand data content, structure and quality to identify anomalies and quality gaps early.

Key Practices:

- Use Aperture’s powerful data profiling capabilities to generate high-level statistics (nulls, outliers, patterns, format inconsistencies).

- Perform column, cross-column, and inter-table analyses to uncover relationships and anomalies.

- Drill down into discovered issues and formulate hypotheses about their root causes.

- Use Aperture’s data profiling tools together with visualisation and integrated metadata and data catalogue repositories.

Best Practice: Start with a small set of high-value CDEs (critical data elements) and profile them iteratively, in line with business rules and expectations.
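As an illustration of the kind of high-level statistics profiling produces, the sketch below computes null counts, distinct counts, the most common value patterns and simple numeric outliers for a single column. It is a minimal, tool-agnostic example in standard-library Python, not how Aperture works internally; the function names `pattern_of` and `profile_column`, the pattern encoding (letters become `A`, digits become `9`) and the 1.5 × IQR outlier rule are all assumptions chosen for illustration.

```python
import re
from collections import Counter
from statistics import quantiles


def pattern_of(value: str) -> str:
    """Reduce a value to a character-class pattern: letters -> 'A', digits -> '9'."""
    letters_masked = re.sub(r"[A-Za-z]", "A", value)
    return re.sub(r"[0-9]", "9", letters_masked)


def profile_column(values: list) -> dict:
    """Return high-level profiling statistics for one column of raw values (illustrative)."""
    # Treat None and empty strings as nulls.
    non_null = [v for v in values if v is not None and v != ""]
    stats = {
        "count": len(values),
        "nulls": len(values) - len(non_null),
        "distinct": len(set(non_null)),
        # The three most frequent patterns; rare patterns often signal format errors.
        "top_patterns": Counter(pattern_of(str(v)) for v in non_null).most_common(3),
    }
    # Flag numeric outliers with the 1.5 x IQR rule (an assumed, simple heuristic).
    numeric = [float(v) for v in non_null if isinstance(v, (int, float))]
    if len(numeric) >= 4:
        q1, _, q3 = quantiles(numeric, n=4)
        iqr = q3 - q1
        low, high = q1 - 1.5 * iqr, q3 + 1.5 * iqr
        stats["outliers"] = [v for v in numeric if v < low or v > high]
    return stats
```

For example, profiling a postcode column that contains `"30O0"` (a letter O typed in place of a zero) surfaces the rare pattern `99A9` alongside the expected `9999`, which is exactly the kind of anomaly worth drilling into with the business owner of that CDE.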

Data Quality Monitoring

Purpose: Continuously track and report on data quality to maintain trust and prevent degradation.

Key Practices:

- Define data quality metrics across dimensions (completeness, accuracy, timeliness, uniqueness, consistency).

- Integrate automated data quality rules into operational processes (e.g. DQ pipelines that move data from source systems into CRMs and data lakehouses).

- Monitor quality trends and issue recurrence using dashboards and alerts.

- Report findings to the data governance team for action and transparency.

Best Practice: Link monitoring to data quality SLAs and ensure alignment with business KPIs to demonstrate impact.
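To show how data quality metrics can be tied to SLAs, here is a minimal sketch that scores one field on three of the dimensions listed above (completeness, uniqueness and timeliness) and raises an alert for each dimension that falls below its threshold. The record layout (a hypothetical `updated` date on every record), the 30-day freshness window and the values in `SLA_THRESHOLDS` are assumptions for illustration only; accuracy and consistency are left out because they need reference data or cross-field rules.

```python
from datetime import date

# Hypothetical SLA thresholds: the minimum share of records that must pass each check.
SLA_THRESHOLDS = {"completeness": 0.95, "uniqueness": 0.99, "timeliness": 0.90}


def dq_metrics(records: list, field: str, as_of: date, max_age_days: int = 30) -> dict:
    """Score one field on completeness, uniqueness and timeliness (each 0.0 to 1.0)."""
    total = len(records)
    if total == 0:
        return {dim: 1.0 for dim in SLA_THRESHOLDS}
    populated = [r[field] for r in records if r.get(field) not in (None, "")]
    # A record counts as timely if its (assumed) 'updated' date is recent enough.
    fresh = [r for r in records if (as_of - r["updated"]).days <= max_age_days]
    return {
        "completeness": len(populated) / total,
        "uniqueness": len(set(populated)) / len(populated) if populated else 1.0,
        "timeliness": len(fresh) / total,
    }


def sla_alerts(metrics: dict, thresholds: dict = SLA_THRESHOLDS) -> list:
    """Return one alert message per dimension that scores below its SLA."""
    return [
        f"{dim}: {score:.1%} is below the {thresholds[dim]:.0%} SLA"
        for dim, score in metrics.items()
        if dim in thresholds and score < thresholds[dim]
    ]
```

Running `sla_alerts(dq_metrics(records, "email", as_of=date.today()))` on a schedule and sending any messages to a dashboard or alert channel is one simple way to monitor quality; storing each run's scores over time is what makes trends and issue recurrence visible.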

Data Remediation

Purpose: Identify and resolve root causes of data issues through structured corrective and preventive actions.

Key Practices:

- Automated correction (e.g. rule-based normalisation and standardisation, and email, phone and address verification).

- Manually directed correction with confidence scoring and steward oversight.

- Manual correction with audit trail when automation is infeasible or high risk.

- Maintain incident tracking systems documenting root cause, remediation actions, resolution times and decisions.

Best Practice: Remediate at the point of origin (i.e. source systems) and apply fixes to upstream systems to prevent recurrence. Use real-time ‘active’ remediation that is consistent with your bulk remediation procedures.
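The sketch below illustrates rule-based standardisation with an audit trail: values a rule can fix are corrected and logged, and values it cannot fix are queued for a data steward rather than guessed. It assumes Australian phone numbers are standardised to E.164 format (for example `+61412345678`) and that only the email domain is lower-cased, since the local part of an address can be case-sensitive. The rule functions, field names and queue structure are hypothetical, and format rules like these only standardise values; they do not verify that a phone number or email address actually exists.

```python
import re
from datetime import datetime, timezone


def normalise_au_phone(raw):
    """Standardise an Australian phone number to E.164 (+61...), or return None."""
    digits = re.sub(r"\D", "", raw or "")
    if len(digits) == 10 and digits.startswith("0"):
        return "+61" + digits[1:]
    if len(digits) == 11 and digits.startswith("61"):
        return "+" + digits
    return None  # no rule applies; a steward must review it


def normalise_email(raw):
    """Trim whitespace and lower-case the domain, or return None if malformed."""
    cleaned = (raw or "").strip()
    if cleaned.count("@") != 1:
        return None
    local, domain = cleaned.split("@")
    return f"{local}@{domain.lower()}" if local and domain else None


# Hypothetical mapping of fields to their automated correction rules.
RULES = {"phone": normalise_au_phone, "email": normalise_email}


def remediate(record, audit_log, steward_queue):
    """Apply each rule; log every change and queue unfixable values for a steward."""
    fixed = dict(record)
    for field, rule in RULES.items():
        old = record.get(field)
        new = rule(old)
        if new is None:
            steward_queue.append((record["id"], field, old))
        elif new != old:
            fixed[field] = new
            audit_log.append({
                "id": record["id"], "field": field, "old": old, "new": new,
                "rule": rule.__name__,
                "at": datetime.now(timezone.utc).isoformat(),
            })
    return fixed
```

Keeping the rules in one place (here the `RULES` mapping) is what lets real-time and bulk remediation stay consistent: both paths call the same functions, and the audit log records which rule changed which value and when.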

Data Stewardship

Purpose: Ensure ongoing accountability for data quality, governance and compliance across the organisation.

Key Roles:

- Business Data Stewards: Define rules, validate issues, work with SMEs.

- Technical Data Stewards: Ensure metadata accuracy, manage transformations.

- Coordinating Stewards: Oversee collaboration across data domains.

Operational Responsibilities:

- Day-to-day quality oversight.

- Support profiling, rule creation and remediation.

- Communicate data issues and advocate improvements.

- Enforce governance policies and ensure SLA conformance.

Best Practice: Assign domain-based stewardship and include stewards in process redesign and rule lifecycle management.

Integrated Quality Framework

Experian’s Aperture and consultancy services can support the full integrated quality framework for your organisation. Contact us to see how we can help.


References:

Disclaimer: This content is provided by Experian Australia Limited (“Experian”) as general information and it is not (and does not contain any form of) professional, legal or financial advice. Experian makes no representations, warranties or guarantees that the information (including links and the views/opinions of authors and/or contributors) (“Information”) contained in this content is error free, accurate or complete. You are solely responsible and liable for any decision made (or not made) by you in connection with Information contained in this content. Experian (and its related bodies corporate) exclude all liability for any and all loss, cost, expense, damage or claim incurred by a party as a result of or in connection with (whether directly or indirectly) this content or any reliance on the Information or links contained within. Experian owns (or has appropriate licences for) all intellectual property rights in this content and this content must not be edited, copied, updated or republished (whether in whole or in part) in any way without Experian’s prior written consent.